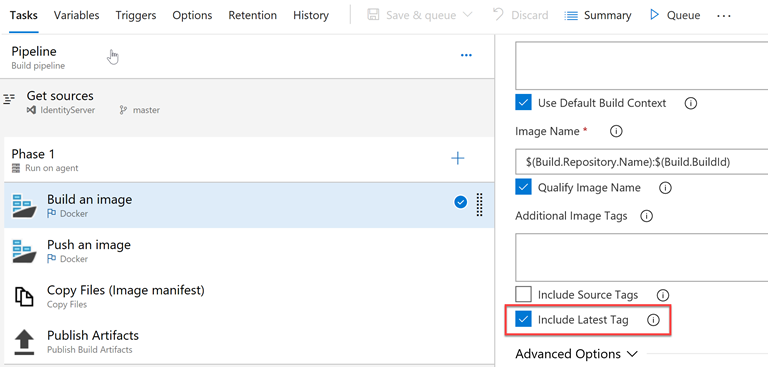

This time I’d like to talk about enhancing an existing configuration in Azure DevOps so that we can properly deploy new images to a Kubernetes cluster in Azure. By default, when you create a new build pipeline, you don’t add any additional tags and you leave the ‘Include Latest Tag’ checkbox checked in the task:

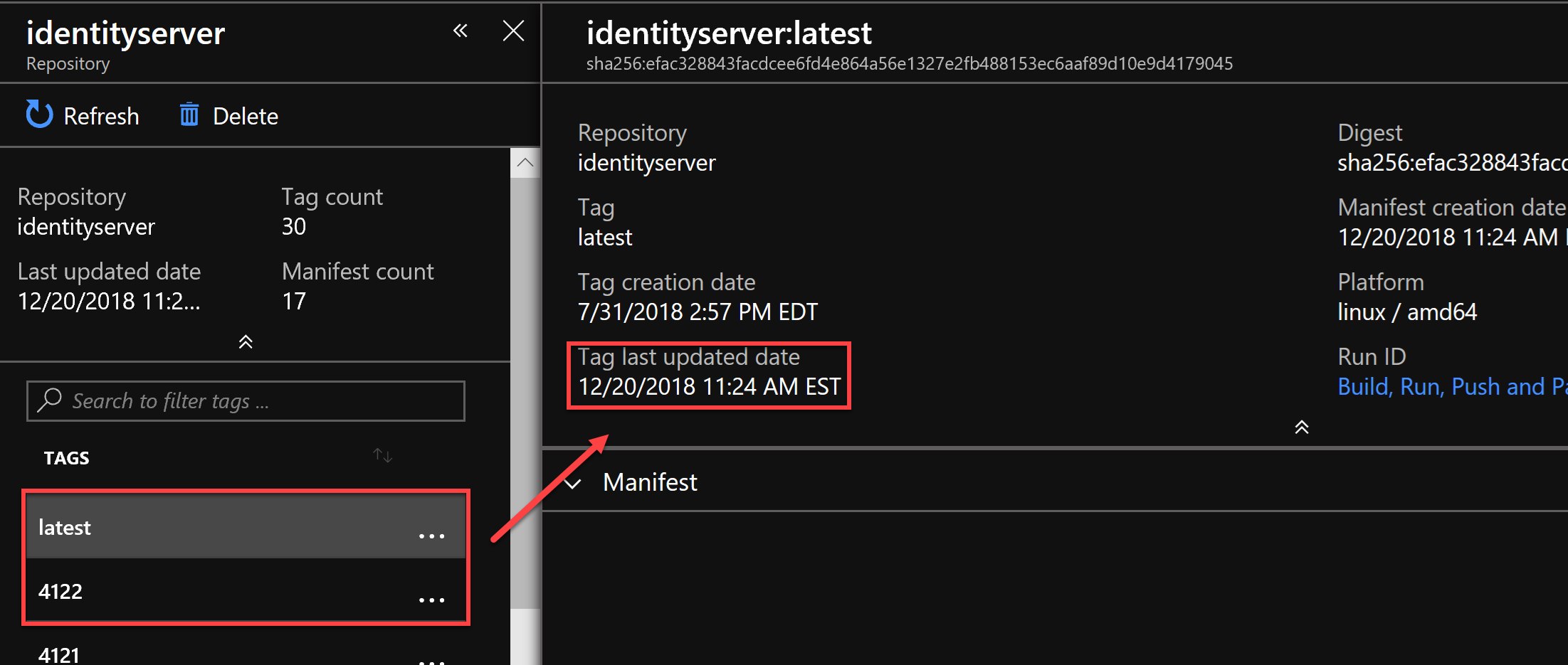

It will work, and in the Azure Container Registry (ACR) you will get two images: one tagged with the build number and another with the ‘latest’ tag:

You may observe that they both have the same creation date and time. The problem with this approach is that once you update the image, the ‘kubectl set’ command does not trigger a pull from the repository, because the ‘:latest’ tag carries no version information. For kubectl, an image reference whose tag stays the same hasn’t changed, so there is nothing to roll out. This topic is widely discussed on GitHub here and here. The flow is simple:

- There is an existing Deployment with image myimage:latest

- The user builds a new image myimage:latest

- The user pushes myimage:latest to the Container Repository/Registry

- The user wants to do something here to tell the Deployment to pull the new image and do a rolling-update of existing pods

The issue is that there is no existing Kubernetes mechanism that properly covers this. Specifying ‘imagePullPolicy: Always’ in the manifest (*.yaml file) has no effect on this issue: the policy only applies when a container is started, so the running Pod remains intact.

So, what does the official documentation say on the matter?

According to Kubernetes documentation:

The default pull policy is IfNotPresent which causes the Kubelet to skip pulling an image if it already exists. If you would like always to force a pull, you can do one of the following:

- set the imagePullPolicy of the container to Always

- omit the imagePullPolicy and use :latest as the tag for the image to use

- omit the imagePullPolicy and the tag for the image to use

- enable the AlwaysPullImages admission controller

NOTE: you should avoid using ‘:latest’ tag, see Best Practices for Configuration for more information.
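The defaulting rule behind the second and third options can be sketched as a small shell function. This is only an illustration of how the policy is chosen when imagePullPolicy is omitted; `default_pull_policy` is a hypothetical helper, not part of kubectl.

```shell
#!/bin/sh
# Hypothetical helper: prints the pull policy Kubernetes would default to
# for an image reference when imagePullPolicy is omitted from the manifest.
default_pull_policy() {
  image=$1
  # Keep only the last path segment, so a registry port such as
  # myregistry:5000/myimage is not mistaken for a tag.
  name=${image##*/}
  case $name in
    *@*)      echo "IfNotPresent" ;;  # pinned by digest
    *:latest) echo "Always" ;;        # explicit :latest tag
    *:*)      echo "IfNotPresent" ;;  # any other explicit tag
    *)        echo "Always" ;;        # no tag is treated as :latest
  esac
}

default_pull_policy "myimage:latest"   # prints Always
default_pull_policy "myimage:4125"     # prints IfNotPresent
```

Note that this policy is only consulted when a container starts, which is why it does not help a Pod that is already running.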

There is not a word about how to resolve the issue. If you dig into this topic a little, though, you will discover that to make Kubernetes re-pull the image you can:

- Kill the existing Pod, and K8s will automatically pull the latest image from the repository

- Update the manifest file with an annotation or label along with the source code you commit. The manifest update will cause the image to be re-pulled from the repo

- Version your images properly and make sure the image tag used in K8s carries the proper image version. This makes it clear to K8s that the version in the tag has changed and differs from the one running in the Pod, so it re-pulls the image during the release process (using the ‘set’ command mentioned before).

Needless to say, the first two options are lousy ones and have nothing to do with automation. Besides, using the ‘:latest’ tag does not support rollback scenarios.
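For reference, the first two workarounds listed above might look roughly like this on the command line. It is a sketch under assumptions: the `app=<name>` label selector and the `redeployed-at` annotation key are hypothetical and must match whatever your own manifests use.

```shell
#!/bin/sh
# Builds a patch that changes an annotation on the Pod template.
# Any change to the template makes the Deployment roll out new Pods.
# The "redeployed-at" key is a hypothetical name chosen for this sketch.
restart_patch() {
  stamp=$1
  printf '{"spec":{"template":{"metadata":{"annotations":{"redeployed-at":"%s"}}}}}' "$stamp"
}

# Workaround 1: delete the Pods and let the Deployment recreate them.
# Assumes the Pods carry an app=<name> label.
recreate_pods() {
  kubectl delete pod -l "app=$1"
}

# Workaround 2: touch the Pod template with a fresh timestamp annotation.
touch_deployment() {
  kubectl patch deployment "$1" -p "$(restart_patch "$(date -u +%Y-%m-%dT%H:%M:%SZ)")"
}
```

Both only re-pull the image when the effective pull policy is Always, and neither records which version is actually running, which is why they fit poorly into an automated release.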

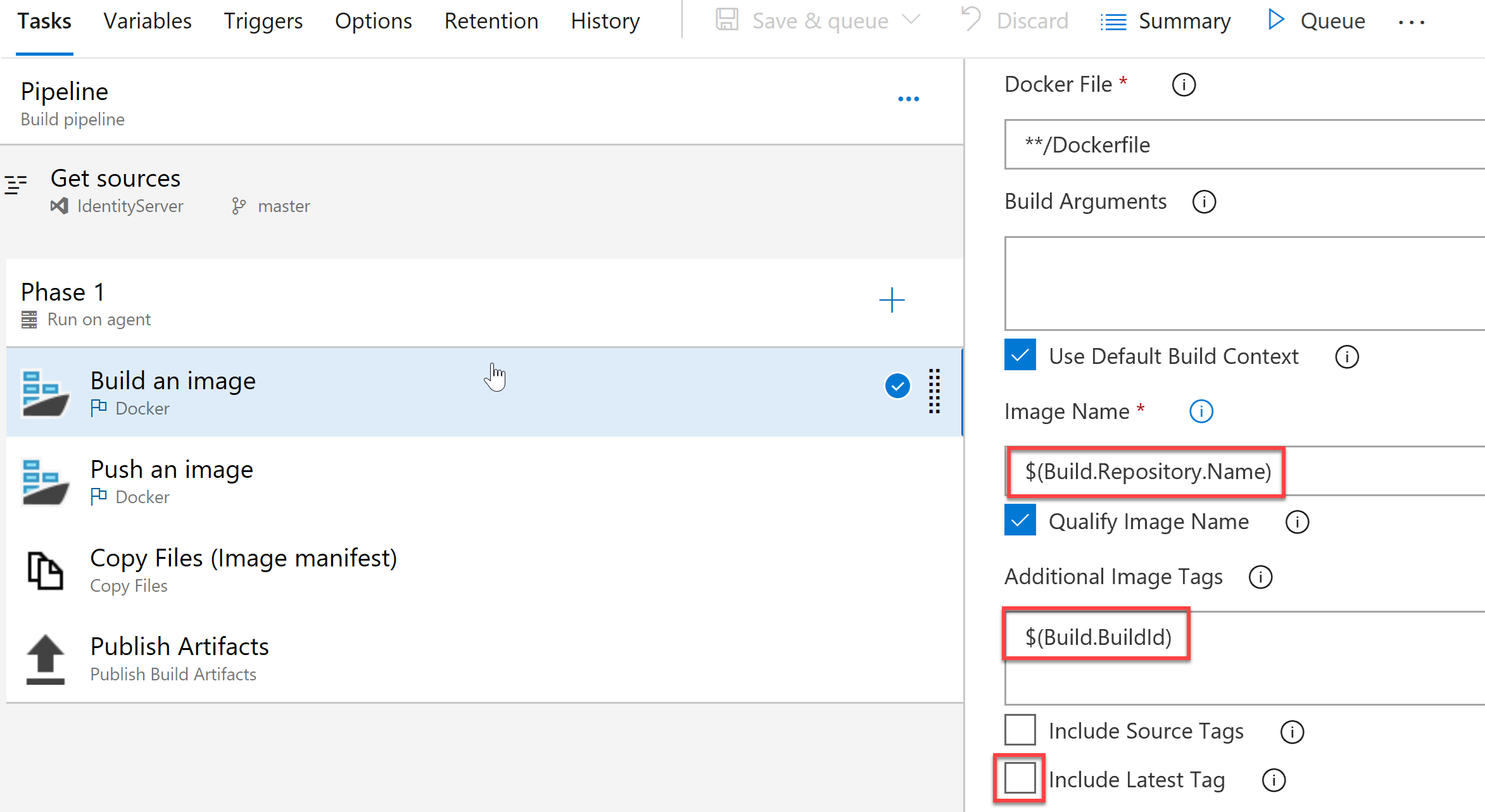

The approach using Azure DevOps is twofold. First, you need to update your build pipeline so that it assigns a version tag to the image that will be pushed to the registry. Normally, the build version is used for this purpose (although you’re free to go with a custom versioning option). In our case, the updated build step would look like the following:

As you can see, ‘Include latest tag’ is unchecked, and we use $(Build.BuildId) in the ‘Additional Image Tags’ section. So if our repo name is ‘IdentityServer’ and, for instance, the build ID is ‘4125’, the resulting image name is ‘identityserver:4125’.
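The naming rule above can be reproduced in a couple of lines of shell. This is a sketch only; `image_tag` is a hypothetical helper and not something the Azure DevOps task exposes.

```shell
#!/bin/sh
# Hypothetical helper: lowercases the repository name (Docker repository
# names must be lowercase) and appends the build ID as the tag.
image_tag() {
  repo=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  printf '%s:%s\n' "$repo" "$2"
}

image_tag "IdentityServer" "4125"   # prints identityserver:4125
```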

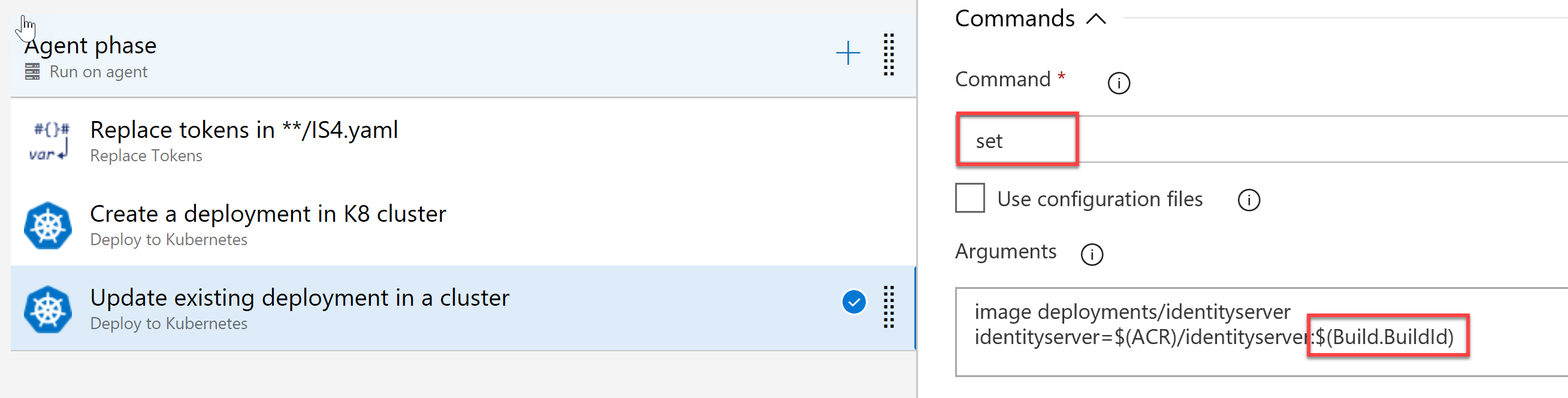

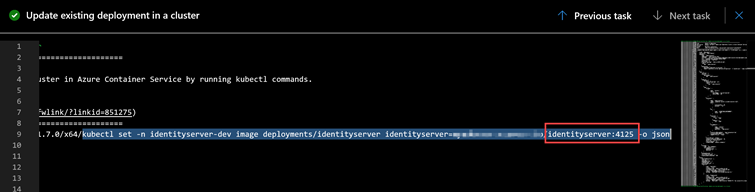

The next step is to update our release pipeline so that it supplies the proper command-line arguments to the ‘set’ command (again, as with the build task, we’ll be using the build version):

So, with this task in mind, the command would look like the following:

kubectl set image deployments/identityserver identityserver=$(ACR)/identityserver:$(Build.BuildId)

where $(ACR) is taken from the environment variables and $(Build.BuildId) is supplied by the build pipeline and kept in memory until the process completes.
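If you prefer to run this step from a script task, the same command can be wrapped together with a rollout check and a rollback. The sketch below makes a few assumptions: the container in the Deployment has the same name as the Deployment (as in the command above), the registry and build ID are passed in as plain arguments, and `KUBECTL` is a hypothetical override that lets you dry-run the script with `KUBECTL=echo`.

```shell
#!/bin/sh
# KUBECTL is a hypothetical override for dry runs, e.g. KUBECTL=echo.
KUBECTL=${KUBECTL:-kubectl}

# Points the Deployment at the versioned image, then waits for the rollout.
# Arguments: registry host, deployment/container name, build ID.
deploy_image() {
  registry=$1
  name=$2
  build_id=$3
  $KUBECTL set image "deployments/$name" "$name=$registry/$name:$build_id" &&
    $KUBECTL rollout status "deployments/$name"
}

# Reverts the Deployment to its previous revision. This is meaningful
# only because every release uses a distinct image tag.
rollback() {
  $KUBECTL rollout undo "deployments/$1"
}
```

In a pipeline you would call it along the lines of `deploy_image "$(ACR)" identityserver "$(Build.BuildId)"`, since Azure DevOps substitutes the `$(...)` macros before the script runs.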

Now it’s time to run and check the results. As we can see from the log file, everything worked as expected:

The K8s dashboard also confirms that the age of the Pod has changed.

Wrapping up

When dealing with a system like a container orchestration platform along with process automation, there are tons of nuances you have to keep in mind. A proper tagging strategy is definitely one of them, along with the variable parametrization and secrets usage we discussed in the previous article. I hope this topic has helped you understand this technology better and get one step closer to being called a Kubernetes expert. I’ll keep posting new gotchas around Kubernetes and DevOps every Friday. Stay tuned!
