As an Architect and consultant, I try to broaden my knowledge horizon by learning something new. Getting new certifications and keeping the ones I already have up to date is an essential part of my job. This article is devoted to the Google Cloud Platform (GCP) and its Professional Cloud Architect certification. I began my journey with a set of courses from Coursera. The beauty of this resource is that you can join and learn for free, and everything you need is already included: even the labs you perform are part of the training you sign up for. The notes below are supplemented with quizzes (and answers) for self-check.

Courses you might find useful for preparation:

- Google Cloud Platform Fundamentals: Core Infrastructure

- Essential Cloud Infrastructure: Foundation

- Essential Cloud Infrastructure: Core Services

- Elastic Cloud Infrastructure: Scaling and Automation

- Elastic Cloud Infrastructure: Containers and Services

- Reliable Cloud Infrastructure: Design and Process

Let's get started!

General info

- On-demand self-service (no human intervention is required to provision resources for you)

- Broad network access (accessible from anywhere in the world)

- Resource pooling (resources are shared among customers)

- Rapid elasticity (get more resources quickly)

- Measured service (pay-as-you-go)

- Co-located services (user-provided, user-managed, and user-maintained on an ISP site)

- Virtualized environment (user-configured, provider-managed, and provider-maintained). This model has been used mainly on-premises

- Google carries as much as 40% of the world's internet traffic. Google's network is the largest of its kind on Earth

- GCP datacenters are 100% carbon-neutral

- Google is one of the world's largest purchasers of renewable energy

- Google's datacenters were the first to achieve ISO 14001 certification

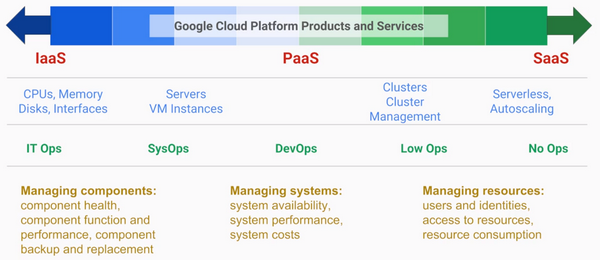

GCP service categories:

- Compute

- Storage

- Networking

- Big Data

- Machine Learning

Ways to interact with GCP:

- Cloud Platform Console (web)

- Cloud SDK (CLI, i.e., Command Line Interface)

- Mobile app for Android and iOS

- REST-based API

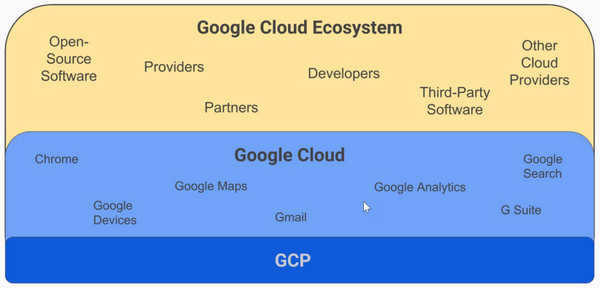

Google Cloud Platform

Security notes

-

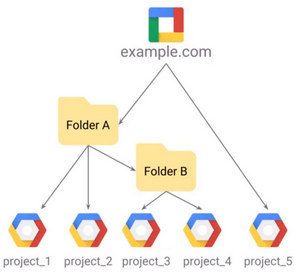

Service and APIs in GCP are enabled on a 'per-project' basis

-

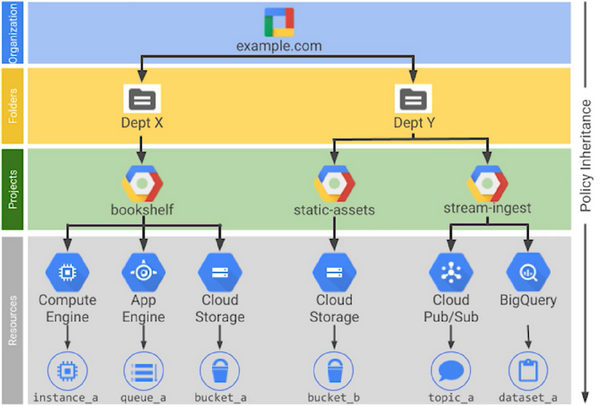

If two or more projects have multiple policies to share, it's better to put them into the folder and assign policies to it (less error-prone way)

- The organization node is the top of the hierarchy. It organizes the projects

- If you have a G Suite domain, GCP projects automatically belong to your organization node (otherwise, you can use Cloud Identity to create one)

- The Project Creator role defines who can create projects and therefore spend money in GCP

- In GCP, resources inherit policies from their parent

- Keep in mind that a less restrictive parent policy overrides a more restrictive resource policy

- GCP IAM (Identity and Access Management) defines who can do what on which resource

- The "who" can be a Google account, a service account, a Google group, or a G Suite or Cloud Identity domain

-

There are three kinds of roles in IAM:

-

Primitive

-

Predefined

-

Custom

-

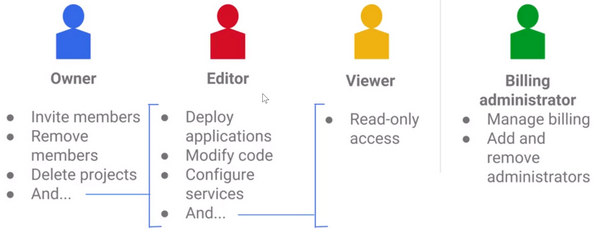

IAM Primitive roles offer fixed, coarse-grained levels of access:

- IAM predefined roles apply to a particular GCP service in a project (they answer the question: who can do what on, for example, Compute Engine resources in this project, folder, or organization)

- Service accounts control server-to-server interactions (i.e., they authenticate one service to another and control the privileges used by a resource)

- Example: a service account has been granted the Compute Engine InstanceAdmin role. This allows an application running in a VM that uses that service account to create, modify, and delete other VMs

- Projects associate objects and services with billing

- Projects contain networks (quota: a maximum of 5 networks)
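The inheritance rule in these notes can be illustrated with a short Python sketch. This is a simplified model, not the IAM API: the `Node` class, the `effective_roles` helper, and the example members and resource names are hypothetical. It assumes only allow-style role grants, which is what the course covers. The effective set of roles on a resource is the union of the grants on that resource and on all of its ancestors, so a narrower grant lower in the tree cannot take away access granted higher up.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class Node:
    """A simplified node in the hierarchy: organization, folder, project, or resource."""
    name: str
    parent: Optional["Node"] = None
    # Hypothetical bindings: role name -> members granted that role on this node.
    bindings: Dict[str, Set[str]] = field(default_factory=dict)

    def grant(self, role: str, member: str) -> None:
        self.bindings.setdefault(role, set()).add(member)


def effective_roles(node: Node, member: str) -> Set[str]:
    """Union of the member's roles on this node and on every ancestor.

    Grants only accumulate on the way down the tree, so a more restrictive
    grant on a child never removes a broader grant inherited from a parent.
    """
    roles: Set[str] = set()
    current: Optional[Node] = node
    while current is not None:
        for role, members in current.bindings.items():
            if member in members:
                roles.add(role)
        current = current.parent
    return roles


org = Node("example-org")
folder = Node("shared-folder", parent=org)
project = Node("demo-project", parent=folder)
vm = Node("vm-1", parent=project)

project.grant("roles/owner", "user:alice@example.com")
vm.grant("roles/viewer", "user:alice@example.com")  # does NOT downgrade Alice to View
folder.grant("roles/viewer", "group:auditors@example.com")

print(sorted(effective_roles(vm, "user:alice@example.com")))      # ['roles/owner', 'roles/viewer']
print(sorted(effective_roles(vm, "group:auditors@example.com")))  # ['roles/viewer']
```

Granting Alice a viewer role on the VM leaves her an Owner, because that role is inherited from the project. This matches the first True/False answer in the quiz that follows.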

Interacting with GCP

Quiz:

- True or False: In Google Cloud IAM, if a policy gives you Owner permissions at the project level, your access to an individual resource in the project may be restricted to View by applying a more restrictive policy to that resource. False

- True or False: All Google Cloud Platform resources are associated with a project. True

- Service accounts are used to provide which of the following? (Choose all that are correct. Choose 3 responses.)

  - A way to restrict the actions a resource (such as a VM) can perform

  - A way to allow users to act with service account permissions

  - Authentication between Google Cloud Platform services

- How do GCP customers and Google Cloud Platform divide responsibility for security? Google takes care of the lower parts of the stack, and customers are responsible for the higher parts.

- Which of these values is globally unique, permanent, and unchangeable, but chosen by the customer? The project ID.

- Consider a single hierarchy of GCP resources. Which of these situations is possible? (Choose all that are correct. Choose 3 responses.)

  - There is an organization node, and there is at least one folder.

  - There is an organization node, and there are no folders.

  - There is no organization node, and there are no folders.

- What is the difference between IAM primitive roles and IAM predefined roles? Primitive roles affect all resources in a GCP project. Predefined roles apply to a particular service in a project.

- Which statement is true about billing for solutions deployed using Cloud Marketplace (formerly known as Cloud Launcher)? You pay only for the underlying GCP resources you use, with the possible addition of extra fees for commercially licensed software.

Virtual Private Cloud (VPC) Network

Creating a Compute Engine VM:

- Pick memory and CPU. Use predefined machine types or make a custom one

- Pick GPUs (Graphics Processing Units) if you need them (for workloads like machine learning)

- Pick persistent disks: standard or SSD

- Pick a boot image: Linux or Windows

- Run a startup script if you need one (e.g., for software pre-installation)

Ways to connect your network to GCP:

- VPN: secure multi-Gbps connection over VPN tunnels

- Direct Peering: private connection between you and Google for your hybrid cloud workloads

- Carrier Peering: connection through the largest partner network of service providers

- Dedicated Interconnect: connect N x 10G transport circuits for private cloud traffic to Google Cloud at Google POPs (points of presence)

-

Has no IP address range

-

Is global and spans all available regions

-

Contains subnetworks

-

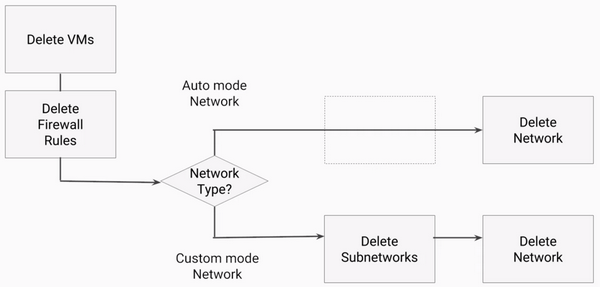

Can be of type default, auto mode or custom mode (auto mode network can be converted to custom, but once custom, always custom)

- An instance's internal hostname is the same as the instance name

- The internal FQDN is [hostname].c.[project-id].internal

- Example: guestbook-test.c.guestbook-151617.internal

- Internal DNS resolution is provided as part of Compute Engine

- It is configured for use on the instance via DHCP

- It provides answers for both internal and external addresses
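The network and DNS rules above can be summarized in a small Python model. The classes, the `"auto"`/`"custom"` mode strings, the `internal_fqdn` helper, and the subnet names, regions, and CIDR ranges are all hypothetical illustrations, not the Compute Engine API. The model encodes four rules from the list: the network itself carries no IP range, each subnet is tied to one region and owns a range, an auto mode network can become custom but never the reverse, and the internal FQDN follows the `[hostname].c.[project-id].internal` pattern.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Subnet:
    region: str                  # a subnet lives in exactly one region
    cidr: ipaddress.IPv4Network  # IP ranges are defined here, not on the network


@dataclass
class VpcNetwork:
    name: str
    mode: str = "auto"           # the "default" network is itself an auto mode network
    # The network is global, so its subnets may sit in any region.
    subnets: Dict[str, Subnet] = field(default_factory=dict)

    def add_subnet(self, name: str, region: str, cidr: str) -> None:
        self.subnets[name] = Subnet(region, ipaddress.IPv4Network(cidr))

    def convert_to_custom(self) -> None:
        self.mode = "custom"

    def convert_to_auto(self) -> None:
        # One-way street: once custom, always custom.
        if self.mode == "custom":
            raise ValueError("a custom mode network cannot be converted to auto mode")


def internal_fqdn(instance_name: str, project_id: str) -> str:
    """Hostname equals the instance name; the FQDN appends .c.<project-id>.internal."""
    return f"{instance_name}.c.{project_id}.internal"


net = VpcNetwork("demo-net")
net.convert_to_custom()
net.add_subnet("web", "us-central1", "10.10.0.0/20")
net.add_subnet("db", "europe-west1", "10.20.0.0/20")

print(net.mode)                                         # custom
print(sorted(s.region for s in net.subnets.values()))   # ['europe-west1', 'us-central1']
print(internal_fqdn("guestbook-test", "guestbook-151617"))
```

The last line prints the same FQDN as the example in the list. Calling `net.convert_to_auto()` at this point raises `ValueError`, which mirrors the "once custom, always custom" rule.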

NOTE: Any feature marked "BETA" has no Service Level Agreement (SLA)

Quiz:

- True or False: Google Cloud Load Balancing allows you to balance HTTP-based traffic across multiple Compute Engine regions. True

- Which statement is true about Google VPC networks and subnets? Networks are global; subnets are regional

- An application running in a Compute Engine virtual machine needs high-performance scratch space. Which type of storage meets this need? Local SSD

- Choose an application that would be suitable for running in a Preemptible VM. A batch job that can be checkpointed and restarted

- How do Compute Engine customers choose between big VMs and many VMs? Use big VMs for in-memory databases and CPU-intensive analytics; use many VMs for fault tolerance and elasticity

- How do VPC routers and firewalls work? Google manages them as a built-in feature

- A GCP customer wants to load-balance traffic among the back-end VMs that form part of a multi-tier application. Which load-balancing option should this customer choose? The regional internal load balancer

- For which of these interconnect options is a Service Level Agreement available? Dedicated Interconnect

- What is a key distinguishing feature of networking in the Google Cloud Platform? Network topology is not dependent on address layout

- What are the three types of networks offered in the Google Cloud Platform? Default network, auto network, and custom network

- What is one benefit of applying firewall rules by tag rather than by address? When a VM is created with a matching tag, the firewall rules apply irrespective of the IP address it is assigned

- What is the purpose of Virtual Private Networking (VPN)? To enable a secure communication method (a tunnel) to connect two trusted environments through an untrusted environment, such as the Internet

- Why might you use Cloud Interconnect or Direct Peering instead of a VPN? Cloud Interconnect and Direct Peering can provide higher availability, lower latency, and lower cost for data-intensive applications

- What is the purpose of a Cloud Router, and why does that matter? It implements a dynamic VPN that allows topology to be discovered and shared automatically, which reduces manual static route maintenance

- How does the autoscaler resolve conflicts between multiple scaling policies? It follows the policy that recommends the largest number of VMs in the group.

- The following command enables autoscaling for a managed instance group using CPU utilization: `gcloud compute instance-groups managed set-autoscaling example-managed-instance-group --max-num-replicas 20 --target-cpu-utilization 0.75 --cool-down-period 90`. Which of the following statements correctly explains what the command is creating?

- Which statement is true of autoscaling custom metrics?
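The autoscaling questions above can be illustrated with a rough Python sketch. In the `gcloud` command:

- `--target-cpu-utilization 0.75` asks the autoscaler to keep average CPU utilization near 75%.
- `--max-num-replicas 20` caps the group at 20 VMs.
- `--cool-down-period 90` tells the autoscaler to wait 90 seconds after a VM starts before collecting information from it.

When several policies are configured, the autoscaler follows the one that recommends the most VMs. The sizing formula below is an assumption: it scales the current size by observed over target utilization and rounds up. That approximates the idea but is not Google's exact algorithm. The function names, the minimum of 1 replica, and the second policy's value are hypothetical, and the cool-down period is not modeled.

```python
import math
from typing import Iterable


def cpu_policy_recommendation(current_size: int, observed_cpu: float, target_cpu: float) -> int:
    """Approximate group size that would bring average CPU utilization to the target.

    Simplified model (an assumption, not Google's exact algorithm): scale the
    current size by observed/target utilization and round up.
    """
    if not 0 < target_cpu <= 1:
        raise ValueError("target_cpu must be in the range (0, 1]")
    return math.ceil(current_size * observed_cpu / target_cpu)


def resolve_policies(recommendations: Iterable[int], min_replicas: int, max_replicas: int) -> int:
    """With several policies, follow the one that asks for the most VMs, then clamp to bounds."""
    largest = max(recommendations)
    return max(min_replicas, min(largest, max_replicas))


# Values mirror the quiz command: --target-cpu-utilization 0.75, --max-num-replicas 20.
TARGET_CPU = 0.75
MAX_REPLICAS = 20
MIN_REPLICAS = 1  # assumed; the command does not set a minimum

cpu_rec = cpu_policy_recommendation(current_size=8, observed_cpu=0.90, target_cpu=TARGET_CPU)
other_rec = 7  # hypothetical recommendation from a second policy (e.g., a custom metric)
print(cpu_rec)                                                             # 10
print(resolve_policies([cpu_rec, other_rec], MIN_REPLICAS, MAX_REPLICAS))  # 10

# A spike that would need more than 20 VMs is capped by --max-num-replicas.
spike_rec = cpu_policy_recommendation(current_size=18, observed_cpu=0.95, target_cpu=TARGET_CPU)
print(resolve_policies([spike_rec], MIN_REPLICAS, MAX_REPLICAS))           # 20
```

Taking the maximum across policies is a conservative choice. No policy is ever starved of capacity, at the cost of sometimes running more VMs than a single policy would ask for.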

GCP Storage options

- Cloud Storage

- Cloud SQL

- Cloud Spanner

- Cloud Datastore

- Cloud Bigtable

Cloud Storage classes:

- Multi-regional (most frequently accessed, 99.95% availability). Good for content storage and delivery.

- Regional (accessed frequently within a region, 99.90%). Good for in-region analytics and transcoding.

- Nearline (accessed less than once a month, 99.00%). Long-tail content, backups.

- Coldline (accessed less than once a year, 99.00%). Archiving, disaster recovery.

Ways to bring data into Cloud Storage:

- Online transfer (using command-line tools like 'gsutil')

- Storage Transfer Service (scheduled and managed batch transfers)

- Transfer Appliance, beta (a rackable appliance to securely ship your data). You can transfer up to a petabyte of data this way. The closest AWS analog is the Snowball service.
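The storage-class guidance can be turned into a rule-of-thumb helper. This is a hypothetical sketch: `choose_storage_class`, its parameters, and the exact thresholds are assumptions derived from the access frequencies in these notes, not a Google API. Multi-regional suits the most frequently accessed content served across regions. Regional suits frequently accessed data processed within one region. Nearline and Coldline trade lower storage fees for retrieval fees, so they fit data read less than once a month or once a year.

```python
from typing import NamedTuple


class StorageClass(NamedTuple):
    name: str
    typical_use: str


MULTI_REGIONAL = StorageClass("Multi-regional", "content storage and delivery")
REGIONAL = StorageClass("Regional", "in-region analytics, transcoding")
NEARLINE = StorageClass("Nearline", "long-tail content, backups")
COLDLINE = StorageClass("Coldline", "archiving, disaster recovery")


def choose_storage_class(reads_per_year: float, serve_globally: bool) -> StorageClass:
    """Rule of thumb: the rarer the access, the colder (cheaper to store) the class."""
    if reads_per_year < 1:    # accessed less than once a year
        return COLDLINE
    if reads_per_year < 12:   # accessed less than once a month
        return NEARLINE
    # Frequently accessed data: multi-regional when served across regions,
    # regional when processed close to compute in a single region.
    return MULTI_REGIONAL if serve_globally else REGIONAL


for reads, global_users in [(0.5, False), (4, False), (365, False), (365, True)]:
    chosen = choose_storage_class(reads, global_users)
    print(f"{reads:>5} reads/year, global={global_users}: {chosen.name} ({chosen.typical_use})")
```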

NOTE: A persistent disk can be resized even while it is attached to a VM. You can grow a disk but never shrink it. When you increase the size of a persistent disk, you also increase its IOPS capacity.

Quiz:

- Your Cloud Storage objects live in buckets. Which of these characteristics do you define on a per-bucket basis? Choose all that are correct (3 correct answers). A geographic location. A default storage class. A globally-unique name.

- Cloud Storage is well suited to providing the root file system of a Linux virtual machine. False.

- Why would a customer consider the Coldline storage class? To save money on storing infrequently accessed data.

- What is a fundamental difference between a snapshot of a boot persistent disk and a custom image? A snapshot is locked within a project, but a custom image can be shared between projects.

- What happens when a custom image is marked "Obsolete"? No new projects can use the custom image, but those already with the image can continue to use it.

- From where can you import boot disk images for Compute Engine? (Select 3)

  - Virtual machines on your local workstation

  - Your physical datacenter

  - Virtual machines that run on another cloud platform

Cloud Bigtable and Cloud Datastore

Quiz:

- Each table in NoSQL databases, such as Cloud Bigtable, has a single schema that is enforced by the database engine itself. False.

- Some developers think of Cloud Bigtable as a persistent hashtable. What does that mean? Each item in the database can be sparsely populated and is looked up with a single key.

- How are Cloud Datastore and Cloud Bigtable alike? Choose all that are correct (2 correct answers). They are both NoSQL databases. They are both highly scalable.

- Cloud Datastore databases can span App Engine and Compute Engine applications. True.
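The "persistent hashtable" answer above can be made concrete with an in-memory analogy in plain Python. This is not the Cloud Bigtable client library. The `SparseTable` class, the row keys, and the column names are hypothetical. Rows are looked up by a single row key, and each row stores only the cells that were actually written. Because Bigtable keeps rows sorted by row key, the sketch sorts keys to imitate a prefix (range) scan, which is why row-key design matters.

```python
from typing import Dict, Iterator, Optional, Tuple


class SparseTable:
    """Toy model of a wide-column table: row key -> {"family:qualifier": value}."""

    def __init__(self) -> None:
        self._rows: Dict[str, Dict[str, bytes]] = {}

    def write(self, row_key: str, column: str, value: bytes) -> None:
        # Only written cells are stored, so rows can have completely different columns.
        self._rows.setdefault(row_key, {})[column] = value

    def read_row(self, row_key: str) -> Optional[Dict[str, bytes]]:
        # Single-key lookup, like a hashtable.
        return self._rows.get(row_key)

    def scan_prefix(self, prefix: str) -> Iterator[Tuple[str, Dict[str, bytes]]]:
        # Rows are kept in key order; sorting here imitates a range scan.
        for key in sorted(self._rows):
            if key.startswith(prefix):
                yield key, self._rows[key]


table = SparseTable()
table.write("sensor#42#2019-01-01T00:00", "metrics:temp", b"21.5")
table.write("sensor#42#2019-01-01T00:01", "metrics:humidity", b"40")
table.write("sensor#7#2019-01-01T00:00", "metrics:temp", b"19.0")

print(table.read_row("sensor#42#2019-01-01T00:01"))  # {'metrics:humidity': b'40'}
print([key for key, _ in table.scan_prefix("sensor#42#")])
```

Putting the sensor ID first in the key groups each device's readings together, so fetching one device's history becomes a single contiguous scan.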

Cloud SQL and Cloud Spanner

Cloud SQL highlights:

- Automatic replication between multiple zones

- Horizontal scaling (read)

- Google security (customer data is encrypted, including backups)

- Compute Engine instances can be authorized to access Cloud SQL instances, and you can configure the Cloud SQL instance to be in the same zone as your VMs

Quiz:

- Which database service can scale to higher database sizes? Cloud Spanner can scale to petabyte database sizes, while Cloud SQL is limited by the size of the database instances you choose. At the time this quiz was created, the maximum was 10,230 GB.

- Which database service presents a MySQL or PostgreSQL interface to clients? Each Cloud SQL database is configured at creation time for either MySQL or PostgreSQL. Cloud Spanner uses ANSI SQL 2011 with extensions.

- Which database service offers transactional consistency on a global scale? Cloud Spanner.

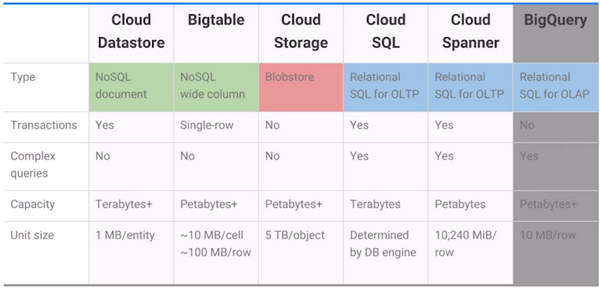

Comparing storage options:

- Consider using Cloud Datastore if you need to store unstructured objects or if you require support for transactions and SQL-like queries. This storage service provides terabytes of capacity with a maximum unit size of 1 MB per entity. It is best for semi-structured application data used in App Engine applications.

- Consider using Cloud Bigtable if you need to store a large number of structured objects. Cloud Bigtable does not support SQL queries, nor does it support multi-row transactions. This storage service provides petabytes of capacity with a maximum unit size of 10 MB per cell and 100 MB per row. It is best for analytical data with heavy read/write workloads, such as AdTech, financial, or IoT data.

- Consider using Cloud Storage if you need to store immutable blobs larger than 10 MB, such as large images or movies. This storage service provides petabytes of capacity with a maximum unit size of 5 TB per object. It is best for structured and unstructured binary or object data, such as images, large media files, and backups.

- Consider using Cloud SQL or Cloud Spanner if you need full SQL support for an online transaction processing (OLTP) system. Cloud SQL provides terabytes of capacity, while Cloud Spanner provides petabytes. If Cloud SQL does not fit your requirements because you need horizontal scalability beyond read replicas, consider using Cloud Spanner. Cloud SQL is best for web frameworks and existing applications, like storing user credentials and customer orders. Cloud Spanner is best for large-scale database applications larger than two terabytes, for example, financial trading and e-commerce use cases.

- The usual reason to store data in BigQuery is to use its big data analysis and interactive query capabilities. You would not want to use BigQuery, for example, as the backing store for an online application.
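The comparison above boils down to a few yes/no questions, which can be written as a rough decision helper. The `choose_storage` function and its flags are hypothetical. The order in which the questions are checked is an assumption, and the helper encodes only the rules of thumb from the list, not an official Google decision tree.

```python
def choose_storage(
    *,
    analytics: bool = False,
    needs_full_sql: bool = False,
    needs_horizontal_scale: bool = False,
    large_immutable_blobs: bool = False,
    high_throughput_no_sql: bool = False,
) -> str:
    """Hypothetical helper mapping the rules of thumb above to a service name."""
    if analytics:
        # Big data analysis and interactive queries, not an online app's backing store.
        return "BigQuery"
    if needs_full_sql:
        # OLTP with full SQL: Spanner when you must scale out beyond read replicas.
        return "Cloud Spanner" if needs_horizontal_scale else "Cloud SQL"
    if large_immutable_blobs:
        # Images, media, backups: up to 5 TB per object.
        return "Cloud Storage"
    if high_throughput_no_sql:
        # Huge volumes of structured data with heavy reads/writes (AdTech, IoT, finance).
        return "Cloud Bigtable"
    # Semi-structured application data with transactions and SQL-like queries.
    return "Cloud Datastore"


print(choose_storage(large_immutable_blobs=True))                         # Cloud Storage
print(choose_storage(high_throughput_no_sql=True))                        # Cloud Bigtable
print(choose_storage(needs_full_sql=True))                                # Cloud SQL
print(choose_storage(needs_full_sql=True, needs_horizontal_scale=True))   # Cloud Spanner
```

These calls line up with several answers in the quiz that follows:

- Video transcoding points to Cloud Storage.
- Streaming sensor data points to Cloud Bigtable.
- A MySQL-compatible application points to Cloud SQL.
- Strong consistency with seamless scaling points to Cloud Spanner.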

Quiz:

- You are developing an application that transcodes large video files. Which storage option is the best choice for your application? Cloud Storage.

- You manufacture devices with sensors and need to stream huge amounts of data from these devices to a storage option in the cloud. Which Google Cloud Platform storage option is the best choice for your application? Cloud Bigtable.

- Which statement is true about objects in Cloud Storage? They are immutable, and new versions overwrite old unless you turn on versioning.

- You are building a small application. If possible, you'd like this application's data storage to be at no additional charge. Which service has a free daily quota, separate from any free trials? Cloud Datastore.

- How do the Nearline and Coldline storage classes differ from Multi-regional and Regional? Choose all that are correct (2 responses). Nearline and Coldline assess lower storage fees. Nearline and Coldline assess additional retrieval fees.

- Your application needs a relational database, and it expects to talk to MySQL. Which storage option is the best choice for your application? Cloud SQL.

- Your application needs to store data with strong transactional consistency, and you want seamless scaling up. Which storage option is the best choice for your application? Cloud Spanner.

- Which GCP storage service is often the ingestion point for data being moved into the cloud, and is frequently the long-term storage location for data? Cloud Storage.
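The answer about object immutability can be illustrated with a toy bucket. This is a hypothetical in-memory model, not the Cloud Storage client library. The `ToyBucket` class is invented for illustration, and its simple counter stands in for real generation numbers. Objects are never edited in place, and every upload creates a new generation. Without versioning, the new generation replaces the old one. With versioning, older generations are kept as noncurrent versions.

```python
from typing import Dict, List, Tuple


class ToyBucket:
    """In-memory stand-in for a bucket, showing overwrite vs. versioned uploads."""

    def __init__(self, versioning: bool = False) -> None:
        self.versioning = versioning
        self._next_generation = 1
        # object name -> [(generation, data), ...], oldest first; the last entry is live
        self._objects: Dict[str, List[Tuple[int, bytes]]] = {}

    def upload(self, name: str, data: bytes) -> int:
        generation = self._next_generation
        self._next_generation += 1
        history = self._objects.setdefault(name, [])
        if not self.versioning:
            history.clear()  # without versioning, the old object is simply replaced
        history.append((generation, data))  # content is never modified in place
        return generation

    def read(self, name: str) -> bytes:
        return self._objects[name][-1][1]

    def generations(self, name: str) -> List[int]:
        return [gen for gen, _ in self._objects.get(name, [])]


plain = ToyBucket()
plain.upload("report.csv", b"v1")
plain.upload("report.csv", b"v2")
print(plain.read("report.csv"), plain.generations("report.csv"))          # b'v2' [2]

versioned = ToyBucket(versioning=True)
versioned.upload("report.csv", b"v1")
versioned.upload("report.csv", b"v2")
print(versioned.read("report.csv"), versioned.generations("report.csv"))  # b'v2' [1, 2]
```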

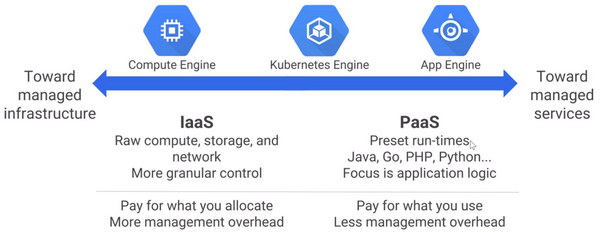

Containers

Another choice for organizing your compute is using Containers rather than Virtual Machines and using Kubernetes Engine to manage them. Containers are simple and interoperable, and they enable seamless fine-grained scaling:

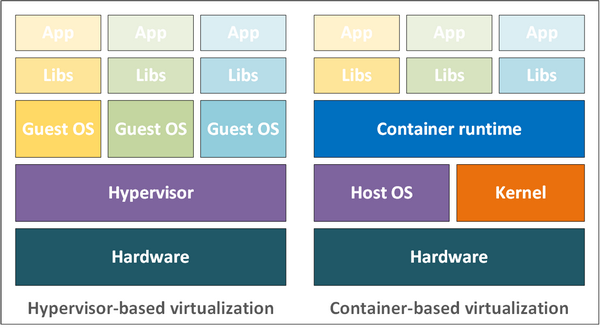

Container-based virtualization is an alternative to hardware virtualization, as used in traditional virtual machines. Virtual machines are isolated from one another in part because each virtual machine has its own instance of the operating system. But operating systems can be slow to boot and can be resource-heavy. Containers address these problems by using modern operating systems' built-in capabilities to isolate environments from one another. Key benefits of containers:

- Consistency across development, testing, and production environments

- Loose coupling between application and operating system layers (containers are easier to move around because they don't carry a full operating system of their own)

- Simplified workload migration between on-premises and cloud environments

- Agile development and operations

Quiz:

- Each container has its own instance of an operating system. False.

- Containers are loosely coupled to their environments. What does that mean? Choose all the statements that are true. (3 correct answers):

  - Deploying a containerized application consumes fewer resources and is less error-prone than deploying an application in virtual machines

  - Containers abstract away unimportant details of their environments

  - Containers are easy to move around

Kubernetes

Quiz 1:

- What is a Kubernetes Pod? In Kubernetes, a group of one or more containers is called a pod. Containers in a pod are deployed together. They are started, stopped, and replicated as a group. The simplest workload that Kubernetes can deploy is a pod that consists only of a single container.

- What is a Kubernetes cluster? A Kubernetes cluster is a group of machines where Kubernetes can schedule containers in pods. The machines in the cluster are called “nodes.”

Quiz 2:

- Where do the resources used to build Kubernetes Engine clusters come from? Because the resources used to build Kubernetes Engine clusters come from Compute Engine, Kubernetes Engine takes advantage of Compute Engine’s and Google VPC’s capabilities.

- Google keeps Kubernetes Engine refreshed with successive versions of Kubernetes. True. The Kubernetes Engine team periodically performs automatic upgrades of your cluster master to newer stable versions of Kubernetes, and you can enable automatic node upgrades too.

- Identify two reasons for deploying applications using containers. (Choose 2 responses.) Simpler to migrate workloads. Consistency across development, testing, production environments.

- Kubernetes allows you to manage container clusters in multiple cloud providers. True.

- Google Cloud Platform provides a secure, high-speed container image storage service for use with Kubernetes Engine. True.

- In Kubernetes, what does "pod" refer to? A group of containers that work together.

- Does Google Cloud Platform offer its own tool for building containers (other than the ordinary docker command)? Yes, the GCP-provided tool is an option, but customers may choose not to use it.

- Where do your Kubernetes Engine workloads run? In clusters built from Compute Engine virtual machines.
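The pod and cluster answers above can be illustrated with a toy scheduler. The classes, CPU figures, and first-fit placement are hypothetical simplifications. The real Kubernetes scheduler filters and scores nodes and is far more involved. The sketch shows only the property the quiz describes: a pod's containers are deployed as one unit, so they all land on the same node of the cluster, or the pod is not placed at all.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Container:
    name: str
    cpu_millicores: int


@dataclass
class Pod:
    name: str
    containers: List[Container]

    @property
    def cpu_request(self) -> int:
        # The pod is scheduled as one unit, so its request is the sum of its containers.
        return sum(c.cpu_millicores for c in self.containers)


@dataclass
class Node:
    name: str
    cpu_capacity: int
    pods: List[Pod] = field(default_factory=list)

    def free_cpu(self) -> int:
        return self.cpu_capacity - sum(p.cpu_request for p in self.pods)


def schedule(pod: Pod, cluster: List[Node]) -> Optional[Node]:
    """First-fit placement: all containers of a pod go to the same node, or none do."""
    for node in cluster:
        if node.free_cpu() >= pod.cpu_request:
            node.pods.append(pod)
            return node
    return None


cluster = [Node("node-a", cpu_capacity=1000), Node("node-b", cpu_capacity=2000)]
web = Pod("web", [Container("app", 600), Container("log-sidecar", 200)])
batch = Pod("batch", [Container("worker", 1500)])

print(schedule(web, cluster).name)    # node-a
print(schedule(batch, cluster).name)  # node-b
```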

Kubernetes is my favorite area, and although I could talk about it for hours well beyond certification preparation, I have to stop here. I will release the second part of this article soon to keep the reading less condensed and overwhelming.

Ciao and see you soon, stay tuned.

References:

- Most of the images are provided courtesy of Google (GCP documentation and additional learning materials)

- Solutions list for GCP: https://cloud.google.com/solutions/mobile/mobile-gaming-analysis-telemetry

- A good collection of links on GitHub: https://github.com/agasthik/GoogleCloudArchitectProfessional
